Code Processing

Overview

MightyMeld processes your code using a system we call "the scribe". You can read more about the MightyMeld Scribe in the MightyMeld architectural overview.

Default Configuration

By default, the MightyMeld Scribe is hosted on our servers, so the code is processed remotely. The include and exclude config options specify which files are sent to MightyMeld.

{
  "run": "npm run start",
  "include": ["src", ".prettierrc.json"],
  "exclude": ["node_modules", "__tests__", "secrets"],
  "run_prettier": true,
  "web_server_url": "http://localhost:3000",
  "editor": "url:cursor://file/%FILENAME:%LINE:%COLUMN"
}
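To make the "included but not excluded" rule concrete, here is a minimal TypeScript sketch of how such a filter could behave. It is an illustration only, not MightyMeld's implementation: the helper names `matchesEntry` and `isSent` are hypothetical, and the matching rule (an entry matches when it equals one or more whole path segments anywhere in the file path) is an assumption, since the exact pattern semantics are not described here.

```typescript
// Hypothetical helpers for illustration; these are not part of MightyMeld.
// ASSUMPTION: an entry matches a file when the entry's segments appear as
// whole, consecutive segments somewhere in the file's path.
function matchesEntry(filePath: string, entry: string): boolean {
  const pathSegments = filePath.split("/");
  const entrySegments = entry.split("/");
  // Slide the entry across the path, comparing whole segments only.
  for (let i = 0; i + entrySegments.length <= pathSegments.length; i++) {
    if (entrySegments.every((seg, j) => pathSegments[i + j] === seg)) {
      return true;
    }
  }
  return false;
}

// A file is sent only if some include entry matches it and no exclude entry does.
function isSent(filePath: string, include: string[], exclude: string[]): boolean {
  const included = include.some((entry) => matchesEntry(filePath, entry));
  const excluded = exclude.some((entry) => matchesEntry(filePath, entry));
  return included && !excluded;
}

// Values copied from the example configuration.
const include = ["src", ".prettierrc.json"];
const exclude = ["node_modules", "__tests__", "secrets"];

const candidates = [
  "src/App.tsx",
  "src/__tests__/App.test.tsx",
  "src/secrets/key.ts",
  ".prettierrc.json",
  "package.json",
];
const sent = candidates.filter((file) => isSent(file, include, exclude));
console.log(sent);
```

Under these assumptions, only `src/App.tsx` and `.prettierrc.json` pass the filter: the test file and the secrets file sit under `src` but are removed by `exclude`, and `package.json` is never included in the first place.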

Your code is associated with an instance. The first time you run npx mightymeld and authenticate, your repo is associated with an instance, and an appropriate mightymeld.secrets file is downloaded to your development machine.

You can run npx mightymeld secrets to choose a new instance and generate a new mightymeld.secrets file.

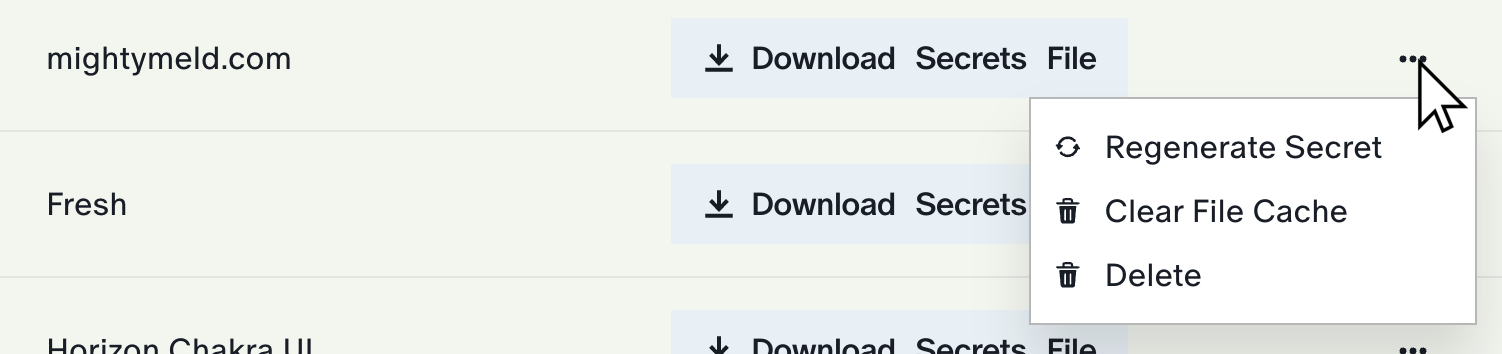

Visit https://mightymeld.app/instances to manage your instances. From there, you can manage your secrets and force-clear the file cache.

Local Code Processing

You can also configure MightyMeld so that your code never leaves your development environment. This allows MightyMeld to be used by teams whose policies forbid code egress (often for compliance reasons). This functionality is currently only available to select developers. Please email us with some information about your project if you would like access.

In local mode, the MightyMeld Scribe runs in your development environment (your laptop, or development container). Therefore, all code processing is performed locally.

If AI is enabled, the scribe running in your development environment sends code to an LLM when you use AI functionality, as described below.

LLM Utilization

When you use AI functionality, your code is sent to an LLM (currently OpenAI) for processing. The code sent is limited to files that have been included but not excluded.

If you'd like to disable AI, consult the generative_ai setting.